Vatsal Shah

Certified ScrumMaster® | Agile Technical Project Manager

AI Speed Without Decision Quality: The Risk Leaders Ignore

AI speed without decision quality is becoming one of the biggest hidden risks in modern product organizations.

AI is making work faster everywhere.

Drafts appear in minutes. Prototypes arrive in hours. Releases happen in days.

But there is a quiet leadership problem underneath the productivity story:

AI speed without decision quality creates more risk than value.

When output is cheap, weak decisions scale faster.

Teams ship more. Customers feel less trust. Leaders spend more time correcting than compounding.

This article is for product leaders, tech leaders, and senior managers who want AI leverage without chaos.

No hype. No fear. Just operating clarity.

Why AI speed without decision quality is increasing everywhere

AI is accelerating speed for three simple reasons.

1) The cost of first drafts is collapsing.

Specs, user stories, QA test ideas, onboarding copy, and even code scaffolds can be generated quickly.

That removes the “blank page” delay.

2) The feedback loop is shorter.

Teams can simulate flows, explore edge cases, and validate messaging before full build cycles.

You see something sooner, so you decide sooner.

3) Coordination friction is lower.

AI can summarize meetings, propose alternatives, and produce artifacts that reduce back-and-forth.

Less waiting means more motion.

This is real productivity.

But here’s the catch: speed is not automatically progress.

Speed is just throughput.

If the decision layer is weak, faster throughput amplifies waste.

The hidden cost of AI speed without decision quality

Most leaders can see the upside of speed.

Fewer can see the downside until it shows up as damage.

The hidden costs look like this:

High-velocity rework

You ship faster, but you undo faster too.

Product teams spend cycles correcting decisions that were never grounded.

Confidence inflation

AI outputs sound fluent.

Fluency can be mistaken for correctness, completeness, or strategy alignment.

Decision debt

Just like tech debt, decision debt accumulates when tradeoffs are skipped.

It shows up later as misalignment, brittle systems, unclear ownership, and “why did we build this?”

Trust erosion

Customers don’t measure your team by how fast you shipped.

They measure you by whether the product feels reliable, safe, and coherent.

Team fatigue

Fast shipping with unclear priorities creates emotional churn.

People feel busy but not effective. That kills morale and retention.

In short:

AI speed without decision quality converts time into motion, not value.

What decision quality actually means

Decision quality is not “being slow.”

It is being clear.

High decision quality has five visible traits.

1) Clear problem framing

The team can answer, in plain words:

- What customer pain are we solving?

- What job-to-be-done becomes easier?

- What is the cost of not solving it?

If the framing is vague, AI will produce volume without direction.

2) Explicit tradeoffs

Every meaningful decision has tradeoffs:

- speed vs reliability

- growth vs trust

- personalization vs privacy

- experimentation vs brand consistency

Decision quality means tradeoffs are stated and chosen, not ignored.

3) Measurable success signals

A decision is stronger when success is measurable:

- Activation improves by X

- Time-to-first-value drops by Y

- Support tickets drop by Z

- Change failure rate stays below threshold

If you can’t measure it, you can’t manage it.

4) Ownership and accountability

Someone must own:

- the outcome metric

- the risk boundaries

- the rollout plan

- the rollback trigger

AI can assist decisions.

It cannot replace accountability.

5) Reversibility thinking

Good teams classify decisions:

- Reversible (small bets, fast rollback)

- Hard to reverse (data policy, architecture, pricing, trust)

This prevents “fast irreversible mistakes.”

Decision quality is a system.

AI exposes whether that system exists.

How AI speed without decision quality amplifies weak systems

AI does not “cause” bad outcomes.

It accelerates whatever your organization already is.

This is why AI speed without decision quality doesn’t just fail quietly: it compounds risk at scale.

If your decision system is weak, AI will amplify:

Weak prioritization → more scattered roadmaps

AI generates endless “good ideas.”

Without strong prioritization, teams chase noise.

Unclear quality bars → more defects and inconsistency

AI-generated output varies.

If review standards are unclear, variation becomes customer-facing instability.

Poor measurement → more activity with no learning

Teams ship faster but don’t learn faster.

That is the fastest path to wasted quarters.

Unowned outcomes → blame loops

When results are unclear, teams default to narratives:

- “The model got it wrong.”

- “Requirements weren’t clear.”

- “We moved fast.”

Strong teams don’t accept that.

They design the workflow so failure modes are visible early.

A practical leadership reminder:

AI increases speed, not clarity.

Leaders must protect clarity.

Global principles such as the OECD AI Principles reinforce the importance of human-centered, accountable decision-making alongside AI adoption.

This aligns closely with the ideas discussed in “AI Decision-Making Framework for Leaders,” where decision clarity determines whether AI accelerates value or confusion.

Impact on product growth & teams

AI speed without decision quality impacts growth in predictable ways.

When it drives product changes, growth metrics become noisy instead of meaningful.

Growth impact #1: Activation becomes noisy

Fast changes to onboarding, copy, flows, and prompts create unstable first-time experiences.

Even small inconsistencies reduce trust in the first session.

Growth impact #2: Retention suffers quietly

Retention is sensitive to reliability.

If AI-assisted releases increase edge-case failures, customers leave silently.

Growth impact #3: Experimentation becomes theater

More A/B tests run, but fewer produce insight.

Why? Because hypotheses are weak and measurement is incomplete.

Growth impact #4: Support load rises

If AI creates “fast wrong,” customers don’t blame the model.

They blame your product.

Team impact #1: More context switching

AI makes it easy to start new work.

Teams end up with too many parallel threads and fewer completed outcomes.

Team impact #2: Trust inside the org declines

When leadership pushes speed without clear decision standards, teams feel exposed.

People become cautious. Psychological safety drops.

Team impact #3: Senior talent is underused

Strong engineers and PMs become editors of endless AI output.

That is a poor use of high judgment.

Product growth is not powered by output volume.

It is powered by correct decisions compounded over time.

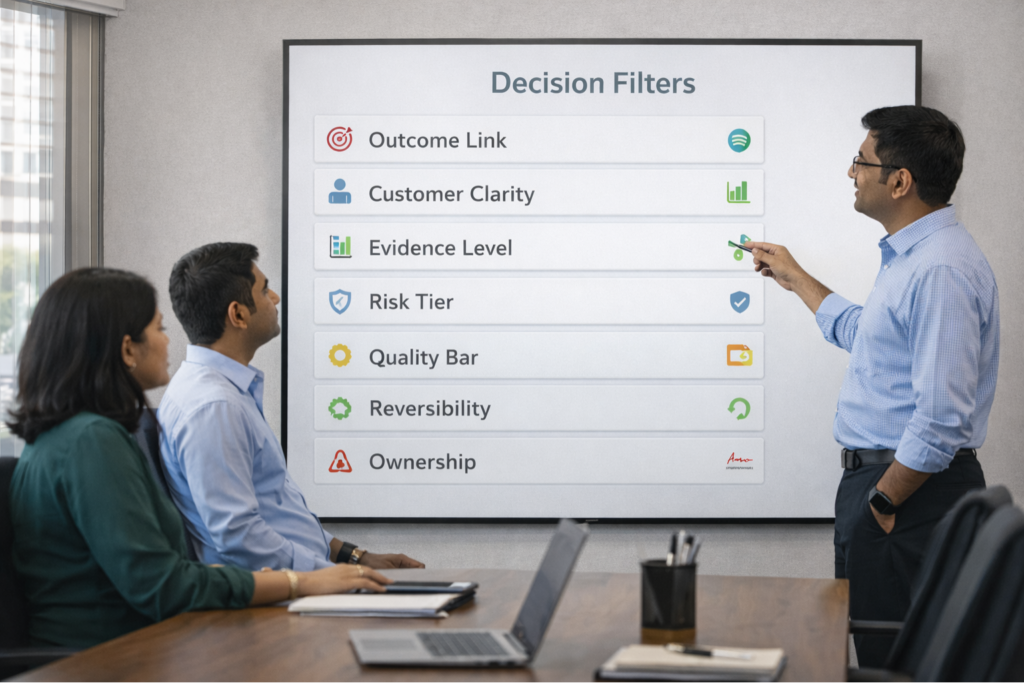

Decision filters to control AI speed without decision quality risk

If you want AI speed with decision quality, you need filters.

Not heavy governance. Just consistent thinking.

Below are 7 decision filters that disciplined leaders use before scaling anything AI-assisted.

Filter 1: Outcome link

Which single metric moves if this works?

If you can’t name it, it’s not ready.

Filter 2: Customer clarity

What customer pain becomes meaningfully easier?

If it’s only internal excitement, it’s a distraction.

Filter 3: Evidence level

What proof do we have in our environment?

Prefer internal validation over vendor demos.

Filter 4: Risk tier

What is the downside if this fails?

Consider:

- privacy exposure

- compliance issues

- reputation damage

- customer trust loss

Use NIST-style risk thinking (for example, the NIST AI Risk Management Framework) as a reference point for making risk explicit in AI decisions.

Filter 5: Quality bar

What does “good” mean here?

Define review depth, accuracy threshold, brand constraints, and safety boundaries.

Filter 6: Reversibility

Can we roll back safely in minutes or hours?

If not, slow down and add guardrails.

Filter 7: Ownership

Who signs their name to the decision and the outcome?

If ownership is unclear, scale will break.

These filters turn AI speed into responsible momentum.
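The seven filters can be written down as a simple pre-scale checklist. The sketch below is one hypothetical way to encode them in Python. The names `Proposal`, `unanswered_filters`, and `ready_to_scale`, and the rule that any blank answer blocks scaling, are illustrative assumptions, not a prescribed process.

```python
from dataclasses import dataclass, field

# The seven filters from this article, each with its guiding question.
FILTERS = (
    "outcome_link",      # Which single metric moves if this works?
    "customer_clarity",  # What customer pain becomes meaningfully easier?
    "evidence_level",    # What proof do we have in our environment?
    "risk_tier",         # What is the downside if this fails?
    "quality_bar",       # What does "good" mean here?
    "reversibility",     # Can we roll back safely in minutes or hours?
    "ownership",         # Who signs their name to the decision and outcome?
)


@dataclass
class Proposal:
    """Hypothetical record of an AI-assisted initiative under review."""
    name: str
    answers: dict = field(default_factory=dict)  # filter name -> written answer


def unanswered_filters(proposal):
    """Return the filters with a missing or blank answer, in filter order."""
    return [f for f in FILTERS if not proposal.answers.get(f, "").strip()]


def ready_to_scale(proposal):
    """Assumed rule: a proposal is ready only when every filter is answered."""
    return not unanswered_filters(proposal)


draft = Proposal(
    name="AI-written onboarding emails",
    answers={
        "outcome_link": "Activation rate",
        "customer_clarity": "New users reach first value sooner",
        "ownership": "",  # nobody has signed their name yet
    },
)

print(unanswered_filters(draft))
print(ready_to_scale(draft))
```

The point of the sketch is that the gaps become visible before the work scales, which is the job the filters are meant to do.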

How disciplined teams prevent AI speed without decision quality

Disciplined teams don’t reject speed.

They understand that AI speed must be constrained by ownership, guardrails, and outcome metrics.

They redesign the operating model so speed produces learning and value.

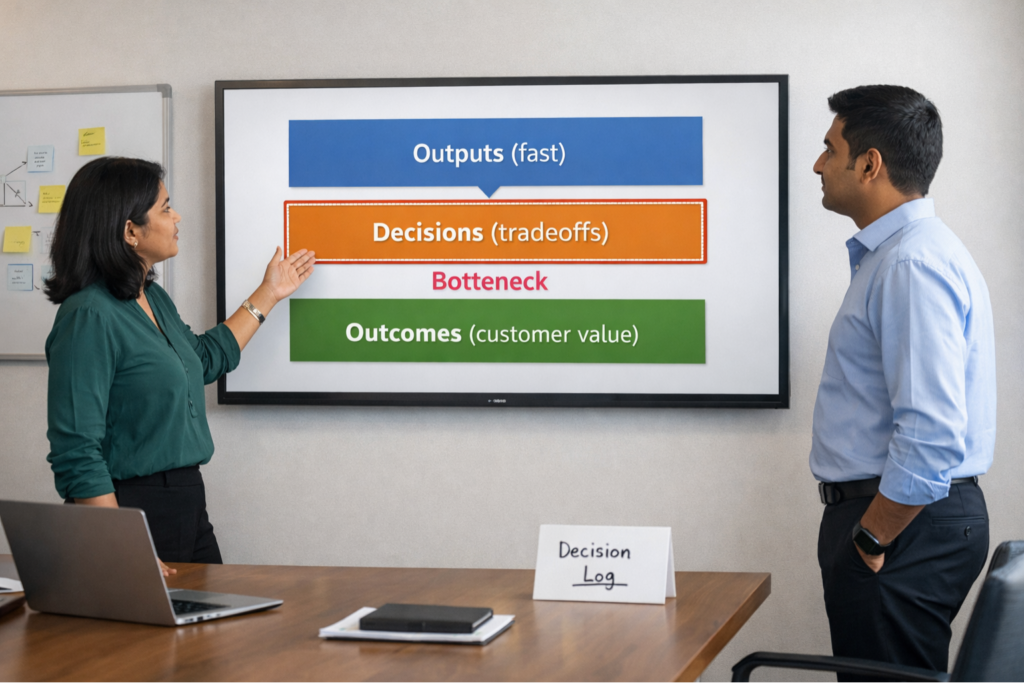

1) They separate outputs, decisions, and outcomes

They explicitly track:

- Outputs: what we produced

- Decisions: what we chose and why

- Outcomes: what changed for customers

If decisions are not visible, AI will flood the team with outputs.

2) They maintain a decision log

A simple weekly log answers:

- What did we decide?

- What tradeoff did we accept?

- What signal will validate it?

- When will we revisit?

This prevents “quiet drift.”
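A decision log does not need special tooling. As a minimal sketch, the four questions above map onto four fields. The class name `DecisionLogEntry`, the helper `due_for_revisit`, and the sample entries are hypothetical and only illustrate the shape of such a log.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DecisionLogEntry:
    decision: str           # What did we decide?
    tradeoff: str           # What tradeoff did we accept?
    validation_signal: str  # What signal will validate it?
    revisit_on: date        # When will we revisit?


def due_for_revisit(log, today):
    """Return entries whose revisit date has arrived, earliest first."""
    due = [entry for entry in log if entry.revisit_on <= today]
    return sorted(due, key=lambda entry: entry.revisit_on)


# Illustrative entries only; the content is made up for the example.
log = [
    DecisionLogEntry(
        decision="Ship AI-drafted help articles with human review",
        tradeoff="Slower publishing in exchange for accuracy",
        validation_signal="Support tickets per article",
        revisit_on=date(2025, 3, 1),
    ),
    DecisionLogEntry(
        decision="Delay AI pricing suggestions",
        tradeoff="Less experimentation in exchange for customer trust",
        validation_signal="Churn among affected accounts",
        revisit_on=date(2025, 6, 1),
    ),
]

for entry in due_for_revisit(log, today=date(2025, 4, 1)):
    print(entry.decision, "->", entry.validation_signal)
```

Surfacing overdue entries in a weekly review is one simple way to keep old decisions from drifting unexamined.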

3) They pair speed metrics with stability metrics

They don’t celebrate speed alone.

They track speed and stability indicators together (DORA-style thinking is useful here):

- Lead time for changes

- Deployment frequency

- Change failure rate

- Time to restore service

Speed plus stability is how you move fast safely.
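To make the pairing concrete, here is a rough sketch of how the four indicators could be computed from a list of deployment records. The `Deployment` record, its field names, and the `summarize` function are assumptions for illustration; real teams would pull this data from their own delivery and incident tooling, and may define each metric differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False                    # did the change cause a failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def _mean_hours(deltas):
    """Average a list of timedeltas in hours; None when the list is empty."""
    if not deltas:
        return None
    total = sum(deltas, timedelta())
    return total.total_seconds() / 3600 / len(deltas)


def summarize(deployments, period_days):
    """Return speed and stability indicators side by side for one period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deployments if d.failed]
    restores = [d.restored_at - d.deployed_at
                for d in failures if d.restored_at is not None]
    return {
        # Speed
        "lead_time_hours": _mean_hours(
            [d.deployed_at - d.committed_at for d in deployments]),
        "deploys_per_day": len(deployments) / period_days,
        # Stability
        "change_failure_rate": len(failures) / len(deployments),
        "time_to_restore_hours": _mean_hours(restores),
    }


start = datetime(2025, 1, 6, 9, 0)
history = [
    Deployment(start, start + timedelta(hours=2)),
    Deployment(start, start + timedelta(hours=4)),
    Deployment(start, start + timedelta(hours=6), failed=True,
               restored_at=start + timedelta(hours=9)),
    Deployment(start, start + timedelta(hours=8)),
]
print(summarize(history, period_days=2))
```

Reporting all four numbers in one view makes it harder to celebrate a rise in deployment frequency while the change failure rate climbs unnoticed.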

4) They design “human + AI” roles clearly

A clean pattern looks like:

- AI drafts → humans decide

- AI proposes options → humans pick tradeoffs

- AI accelerates execution → humans verify quality

- AI summarizes → humans interpret and act

AI is leverage. Humans are judgment.

5) They treat guardrails as a growth enabler

They build lightweight rules:

- data handling boundaries

- approved tools by sensitivity tier

- review requirements by risk tier

- audit trails for high-impact decisions

This reduces incidents and increases confidence.

The distinction between outputs and outcomes is explored further in “AI tool speed vs product outcomes,” especially for product and engineering leaders.
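Lightweight guardrails of this kind can be expressed as a small lookup table. The sketch below assumes three risk tiers and two controls (human review and an audit trail). The tier names, the rules per tier, and the functions `required_controls` and `may_ship` are hypothetical placeholders that each organization would replace with its own policy.

```python
# Assumed policy table: which controls each risk tier requires.
REVIEW_RULES = {
    "low": {"human_review": False, "audit_trail": False},
    "medium": {"human_review": True, "audit_trail": False},
    "high": {"human_review": True, "audit_trail": True},
}


def required_controls(risk_tier):
    """Look up the controls for a tier, rejecting unknown tiers loudly."""
    if risk_tier not in REVIEW_RULES:
        raise ValueError(f"unknown risk tier: {risk_tier!r}")
    return REVIEW_RULES[risk_tier]


def may_ship(risk_tier, human_reviewed, audit_logged):
    """Return True only when every control required for the tier is satisfied."""
    rules = required_controls(risk_tier)
    if rules["human_review"] and not human_reviewed:
        return False
    if rules["audit_trail"] and not audit_logged:
        return False
    return True


print(may_ship("low", human_reviewed=False, audit_logged=False))
print(may_ship("high", human_reviewed=True, audit_logged=False))
```

Keeping the rules in one table means low-risk work stays fast while high-impact decisions pick up review and an audit trail by default.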

A real-life pattern many leaders recognize

A product org enables AI across PRDs, code, and marketing pages.

In three weeks, output doubles.

Then, four things happen:

- activation stays flat

- defects rise

- support tickets increase

- teams argue about priorities

Why?

AI speed without decision quality multiplied activity, not direction.

The fix is not “use less AI.”

The fix is “upgrade decision quality.”

Closing reflection

AI will keep getting faster.

That is not the differentiator.

The differentiator will be leadership teams who can say:

- “We know which decisions matter most.”

- “We know the tradeoffs we accept.”

- “We know how we measure success.”

- “We know how we control risk.”

- “We know who owns outcomes.”

If you remember one line, make it this:

AI speed without decision quality creates more risk than value.

Speed is common. Decision quality is rare. That’s the advantage.

AI speed without decision quality creates more risk than value, not because AI is flawed but because leadership judgment is irreplaceable.