Vatsal Shah

Certified ScrumMaster® | Agile Technical Project Manager

Tool Speed vs Decision Quality: The Hidden Leadership Risk That Can Kill Growth

In 2025, the most dangerous leadership confusion is simple: mistaking tool speed for decision quality. It looks like progress until you measure outcomes.

In modern organizations, leadership must actively protect decision quality as speed increases.

AI tools can draft strategy docs in minutes, generate prototypes in hours, and ship iterations in days. That acceleration is real. But here’s the uncomfortable truth senior leaders must internalize:

Tools accelerate action, not judgment.

If your org gets faster at producing outputs without getting better at making decisions, you won’t just waste time—you’ll scale the wrong work with confidence.

Why “Speed” Became Cheap (And Why That’s a Problem)

A decade ago, speed was a competitive advantage because execution was expensive. Today, tools compress execution cost so dramatically that speed is no longer a moat. The moat is decision quality—what you choose, what you reject, and what you protect.

When leaders don’t adjust their operating model, three failure modes appear:

- High-velocity waste: More output, same outcomes.

- Confident wrongness: AI-generated certainty hides weak assumptions.

- Trust erosion: Faster shipping increases risk if reliability and governance don’t keep up.

This is the real leadership gap behind tool speed vs decision quality:

Tools over-optimize activity; leaders must protect judgment.

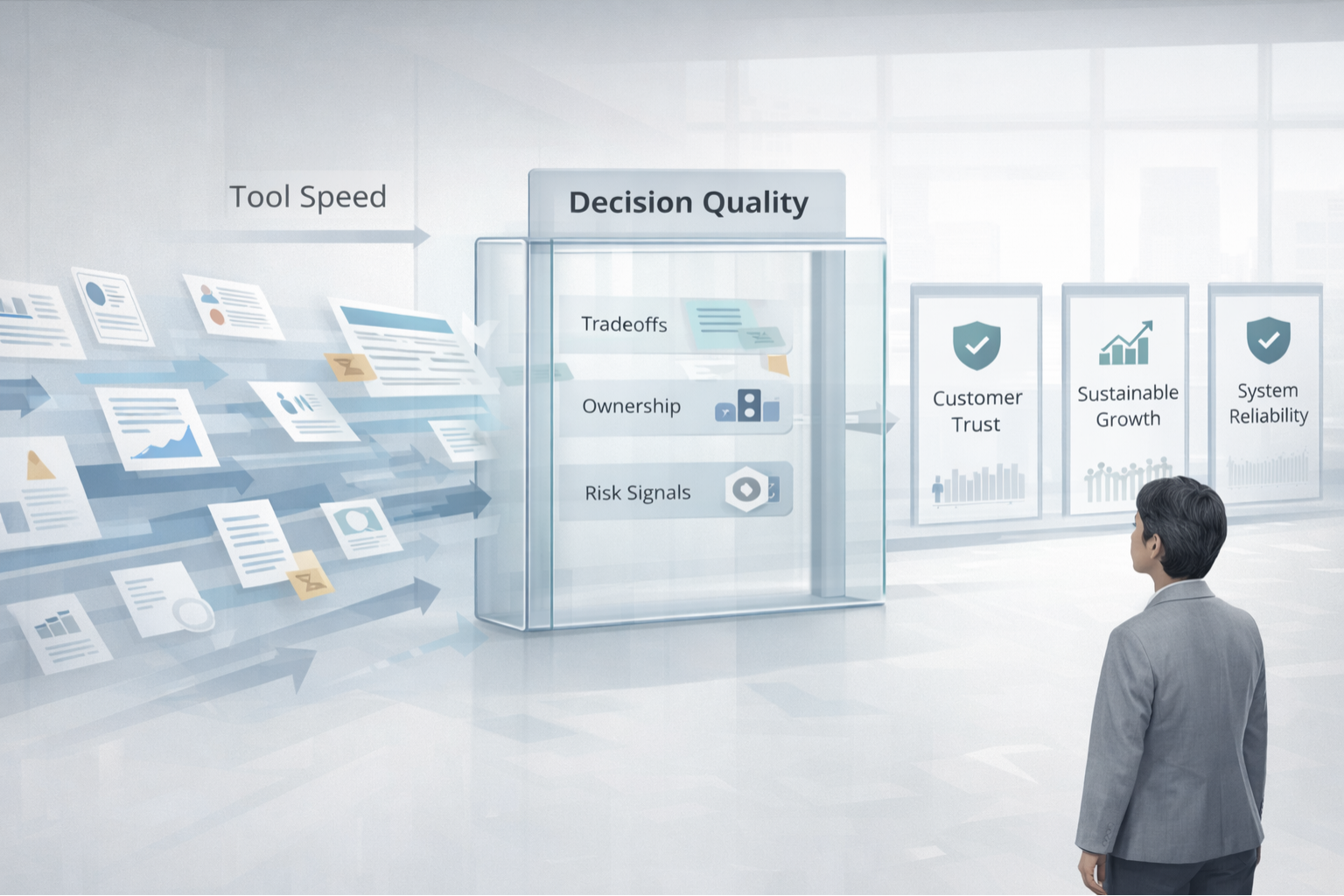

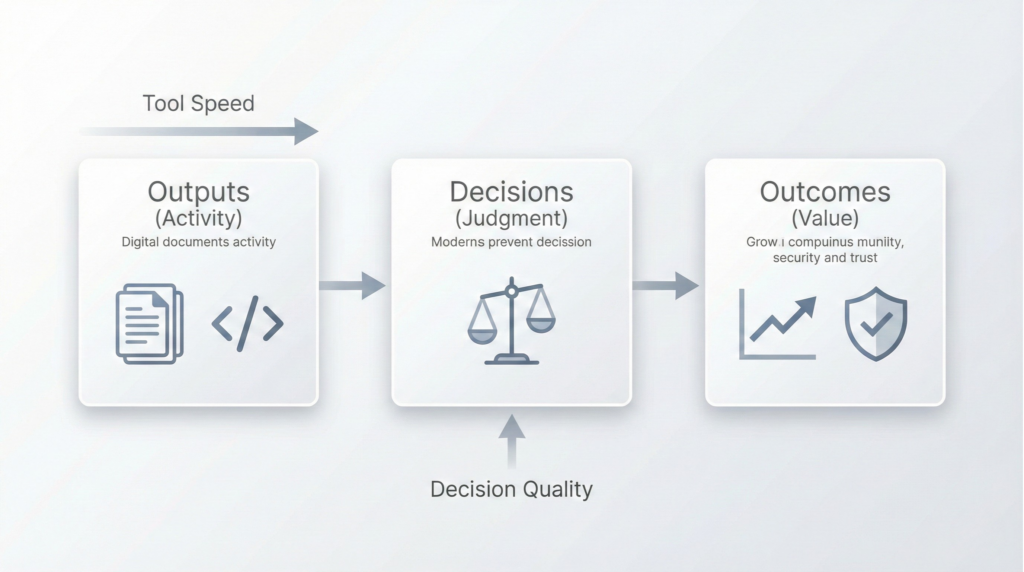

Tool Speed vs Decision Quality: A Practical Decision Quality Framework for Leaders

Think of modern execution as three layers:

- Activity layer (Outputs): drafts, code, PRDs, campaigns, designs, tickets

- Decision layer (Judgment): tradeoffs, sequencing, constraints, ownership

- Outcome layer (Value): activation, retention, revenue, reliability, trust

AI multiplies the Activity layer.

Only leadership can upgrade the Decision layer.

Customers reward the Outcome layer.

If you don’t explicitly build decision discipline, the Activity layer will expand until it drowns the other two.

Product & Growth Implications: Product Outcomes vs Outputs

When decision quality lags behind tool speed, growth doesn’t just slow—it becomes noisy. You’ll see symptoms like:

1) Activation stalls even while shipping increases

Teams celebrate “more releases,” but activation stays flat because the product isn’t removing the single biggest friction point.

Leadership move: Name one “north friction” per quarter, and require every AI-accelerated initiative to map to it.

2) Retention drops due to reliability and coherence issues

Tool speed can increase change volume, and without guardrails, teams degrade stability. To avoid confusing activity with outcomes and to prevent “fast breakage,” many orgs now pair velocity with stability by tracking DORA’s four key metrics for software delivery performance: deployment frequency, lead time for changes, change failure rate, and time to restore service.

Leadership move: Require every team to report speed + stability together (not separately).
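To make “speed + stability together” concrete, here is a minimal sketch of a team report that computes all four DORA keys from one list of deployment records. The `Deployment` record shape, its field names, and the sample data are hypothetical assumptions for illustration, not a DORA-prescribed schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; map it to whatever your pipeline actually logs.
    committed_at: datetime                   # change committed
    deployed_at: datetime                    # change live in production
    failed: bool = False                     # caused a production failure?
    restored_at: Optional[datetime] = None   # service restored (failures only)


def four_keys_report(deploys: list[Deployment], period_days: int) -> dict:
    """Compute speed and stability in one report so neither is read alone."""
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    restores = [d.restored_at - d.deployed_at
                for d in failures if d.restored_at is not None]
    return {
        # Speed
        "deploys_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        # Stability
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "median_time_to_restore": median(restores) if restores else None,
    }


# Illustrative sample data, not real measurements.
t0 = datetime(2025, 1, 6, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0, t0 + timedelta(hours=2), failed=True,
               restored_at=t0 + timedelta(hours=3)),
]
report = four_keys_report(deploys, period_days=7)
print(report)
```

Because one function returns all four keys, a dashboard built on it cannot show deployment frequency rising without also showing whether the change failure rate rose with it.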

3) Brand trust erodes quietly

AI-generated content and rapid UI changes can feel inconsistent. Customers don’t call it “AI.” They call it “confusing,” “buggy,” or “unreliable.”

Leadership move: Define “voice + quality bars” for anything customer-facing—copy, onboarding, support, and UX patterns.

4) Roadmaps turn into reaction loops

If every AI demo becomes a “must-ship,” you don’t have strategy—you have a dopamine pipeline.

Leadership move: Run an experiment portfolio, not a launch parade:

- Core outcomes (activation, retention, reliability)

- AI leverage (internal efficiency)

- Frontier bets (risky differentiators)

And set a rule: Frontier bets never steal oxygen from core outcomes.

AI Leadership Responsibility: Why Judgment Must Have an Owner

In most organizations, tool adoption is treated like enablement: “roll out, train, measure usage.”

That framing is too small.

AI adoption is an operating model change. And operating models require ownership.

Here’s the leadership standard:

Ownership: “The model did it” is never an answer

AI can draft, suggest, and generate. It cannot own outcomes.

Make ownership explicit:

- every AI-assisted asset has a named owner

- every high-impact decision has a decision log

- every metric movement has a responsible leader
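A decision log can be as lightweight as a typed record that refuses to exist without an owner. The sketch below is one hypothetical shape for such a log; the `DecisionRecord` class, its fields, and the list of rejected “owners” are illustrative assumptions, not a standard format.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical placeholder names that must never stand in for an accountable person.
NON_OWNERS = {"ai", "the ai", "model", "the model"}


@dataclass
class DecisionRecord:
    title: str
    owner: str              # a named human who answers for the outcome
    decision: str
    tradeoffs: list[str]    # what we gave up, stated in daylight
    reversible: bool        # reversible vs irreversible
    metric: str             # the outcome metric this decision should move
    ai_assisted: bool = False
    decided_on: date = field(default_factory=date.today)

    def __post_init__(self) -> None:
        # Enforce ownership at creation time instead of in a retro.
        owner = self.owner.strip().lower()
        if not owner or owner in NON_OWNERS:
            raise ValueError(f"{self.title!r} needs a named human owner")
        if not self.metric.strip():
            raise ValueError(f"{self.title!r} must name the metric it should move")


# Illustrative entry; the owner and details are hypothetical.
decision_log: list[DecisionRecord] = []
decision_log.append(DecisionRecord(
    title="Ship AI-drafted onboarding copy",
    owner="Head of Growth",
    decision="Release to 10% of new users behind a flag",
    tradeoffs=["faster iteration", "risk to voice consistency"],
    reversible=True,
    metric="time-to-first-value",
    ai_assisted=True,
))
```

Checking at creation time means an ownerless entry never reaches the log at all, which is exactly what “the model did it” is never an answer requires.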

Risk Signals: AI Governance Guardrails That Enable Safe Speed

If you don’t govern AI, you’ll govern incidents.

Use a practical risk frame: simple language, real guardrails. The NIST AI Risk Management Framework (AI RMF), built around four functions (Govern, Map, Measure, Manage), is a strong reference for leaders who want trustworthy, safe AI adoption without killing velocity.

Tradeoffs: Speed is not the goal—outcomes are

Leaders must force tradeoffs into daylight:

- What are we optimizing—activation, retention, revenue, reliability, or trust?

- What are we willing to slow down to protect quality?

- What is reversible vs irreversible?

Practical Guidance — A 30-Day “Decision Quality” Upgrade

If you want action without chaos, run this 30-day operating cadence.

Week 1 — Pick two workflows (one customer-facing, one internal)

Examples:

- Customer-facing: onboarding flow improvement (time-to-first-value)

- Internal: support triage (resolution time + accuracy)

Define one metric each. No metric = no project.

Week 2 — Add decision guardrails before building

Minimum guardrails:

- baseline vs expected delta

- quality checklist (what “good” means)

- risk tier + data handling rules

- rollback plan (reversibility)
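Week 1’s “no metric = no project” rule and the Week 2 guardrails can be turned into a simple pre-build gate. This is a minimal sketch under stated assumptions: the `Proposal` fields, the three-level risk tiers, and the sample values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

RISK_TIERS = {"low", "medium", "high"}  # hypothetical three-level tiering


@dataclass
class Proposal:
    name: str
    metric: Optional[str] = None             # Week 1: one metric, or no project
    baseline: Optional[float] = None         # current value of that metric
    expected_delta: Optional[float] = None   # the move we claim the work will cause
    quality_checklist: list[str] = field(default_factory=list)  # what "good" means
    risk_tier: Optional[str] = None
    data_handling_rules: Optional[str] = None
    rollback_plan: Optional[str] = None      # how we reverse it if it goes wrong


def missing_guardrails(p: Proposal) -> list[str]:
    """Return unmet guardrails; an empty list means the work is cleared to build."""
    gaps = []
    if not p.metric:
        gaps.append("metric (no metric = no project)")
    if p.baseline is None or p.expected_delta is None:
        gaps.append("baseline vs expected delta")
    if not p.quality_checklist:
        gaps.append("quality checklist")
    if p.risk_tier not in RISK_TIERS or not p.data_handling_rules:
        gaps.append("risk tier + data handling rules")
    if not p.rollback_plan:
        gaps.append("rollback plan")
    return gaps


# Illustrative proposal with made-up numbers.
triage = Proposal(
    name="AI-assisted support triage",
    metric="median resolution time (hours)",
    baseline=18.0,
    expected_delta=-4.0,
    quality_checklist=["ticket routed to the right queue", "no customer PII in prompts"],
    risk_tier="medium",
    data_handling_rules="redact PII before any model call",
)
print(missing_guardrails(triage))  # ['rollback plan']
```

Returning the list of gaps, rather than a bare yes/no, tells the team exactly which guardrail to add before building starts.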

Week 3 — Ship + measure (instrument first)

Don’t ship blind. Instrument before release.

Week 4 — Decide (scale, modify, or kill)

Kill fast when outcomes don’t move. That is leadership maturity.
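The Week 4 call is easier to make fast when the rule is agreed before the results arrive. A minimal sketch, assuming Week 2 recorded a baseline and an expected delta; the `week4_decision` function and its 50% “modify” threshold are hypothetical choices, not part of the cadence as described.

```python
def week4_decision(baseline: float, expected_delta: float, observed: float,
                   modify_threshold: float = 0.5) -> str:
    """Return "scale", "modify", or "kill" by comparing the observed move
    with the move promised in Week 2.

    The sign of expected_delta sets which direction counts as progress, so the
    same rule works for metrics that should fall (resolution time) or rise
    (activation rate). modify_threshold is a hypothetical cut-off.
    """
    if expected_delta == 0:
        raise ValueError("a project must promise a non-zero move")
    progress = (observed - baseline) / expected_delta  # 1.0 = hit the target
    if progress >= 1.0:
        return "scale"
    if progress >= modify_threshold:
        return "modify"
    return "kill"  # outcome didn't move enough, or moved the wrong way


# Resolution time: baseline 18h, promised -4h (illustrative numbers).
for observed in (13.5, 15.0, 18.5):
    print(observed, week4_decision(18.0, -4.0, observed))
```

“Kill” covers both “no meaningful move” and “moved the wrong way,” so a team cannot rescue a flat result by pointing at output volume.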

Real-life Example: The Prototype Trap

A founder enables AI coding + design tools. Within weeks:

- prototypes multiply

- stakeholders get excited

- the team looks “insanely productive”

But 6–8 weeks later:

- activation doesn’t improve

- retention is flat

- support load increases

- roadmap becomes reactive

Root cause: tool speed increased outputs, but leadership never clarified:

- the single customer pain to solve

- the success metric that defines value

- the non-negotiable quality + reliability bar

Fix: leadership resets the scoreboard to one outcome metric, introduces decision logs for major tradeoffs, and enforces a 14-day proof window for AI-accelerated work.

This cadence reinforces execution discipline for leaders without slowing teams or introducing unnecessary bureaucracy.

That’s how leaders win the tool speed vs decision quality tradeoff: by making judgment measurable.

Conclusion: Tools Don’t Create Winners—Leaders Do

Tools can accelerate action.

Only leaders can accelerate judgment.

If you want sustainable growth, treat tool speed vs decision quality as a leadership system:

- decisions get owners

- risk gets signals

- outcomes get a scoreboard

The question to ask this week:

Where is your org producing more AI-powered activity—without moving a single core outcome?