Vatsal Shah

Certified ScrumMaster® | Agile Technical Project Manager

AI Accelerates Execution — 9 Discipline Rules That Decide Outcomes

AI accelerates execution.

It does not automatically improve judgment.

In modern product teams, clarity still depends on human judgment and discipline.

Assuming that faster output means better judgment is the trap many product and delivery teams fall into.

With AI, you can draft a PRD in minutes, generate code in hours, and produce ten options before lunch. But speed without clarity creates a new kind of waste: fast confusion.

This article is for Product Managers, Tech Leads, Engineering Managers, and Delivery Leaders who want calm execution—without hype.

We’ll separate speed from progress, and we’ll outline practical discipline that keeps AI output connected to real outcomes.

Why AI Accelerates Execution but Not Clarity

AI reduces friction in producing artifacts.

It can summarize notes.

It can propose architectures.

It can generate test cases and UI copy.

That helps. But clarity comes from constraints—business goals, user reality, and delivery trade-offs. AI does not own those constraints. Your team does.

Here’s the simplest way to think about it:

- AI increases throughput (more output per unit time).

- Clarity increases direction (more correct decisions per unit time).

Throughput without direction looks productive.

But it often creates rework.

For senior delivery leaders, the hidden cost is not “slow typing.”

It is misalignment.

AI can accelerate misalignment too.

If the problem statement is vague, AI will still generate confident content. That content may be coherent. It may be wrong.

A disciplined team treats AI as an accelerator for validated intent, not as a replacement for intent.

If you want a related mental model on filtering what matters in AI updates, this internal piece connects well: “AI Signals for Leaders: Improve Decision Quality Without Panic.”

Where teams confuse speed with progress

Execution teams usually confuse speed with progress in four places.

a) Output volume becomes the goal

More Jira tickets closed.

More story points burned.

More documents produced.

But outcomes do not care about volume. Outcomes care about value delivered and risk reduced.

b) “AI-made” artifacts bypass normal rigor

A generated PRD skips stakeholder review.

AI-written code skips architecture fit.

AI-generated test cases skip product risk thinking.

The workflow gets “faster,” but the delivery gets weaker.

c) The team ships motion, not learning

AI makes it easy to build quickly.

So teams build quickly.

But the goal is not building.

The goal is learning what changes user behavior.

For a product growth lens on this, see this internal piece: “Product Growth Strategy in the Age of AI.”

d) The team compresses decisions too early

AI creates a false sense of certainty.

Instead of exploring options, teams pick the first “good-looking” answer. Then they execute fast, in the wrong direction.

Speed is only progress if it is aligned to a measurable outcome.

The role of execution discipline in AI-driven teams

Execution discipline is the set of behaviors that keep delivery connected to outcomes under pressure.

When AI accelerates execution, weak execution discipline does not get smaller; it becomes visible faster.

In AI-driven teams, discipline matters more because:

- You can generate more options than you can evaluate.

- You can create more work-in-progress than you can finish.

- You can introduce more hidden defects than you can detect early.

This is why disciplined teams don’t ask, “Can AI do it?”

They ask:

- “What decision does this support?”

- “How will we verify it?”

- “What is the smallest proof that changes risk?”

- “What metric will move if we are right?”

Discipline is not bureaucracy.

It is a guardrail against fast waste.

For engineering and delivery alignment, it also helps to anchor on delivery performance metrics, not vibes. DORA’s “four keys” (deployment frequency, lead time for changes, change failure rate, and time to restore service) are a practical baseline for balancing velocity and stability.
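As a rough illustration of anchoring on these metrics, here is a minimal Python sketch that computes DORA-style four keys from a list of deployment records. The `Deployment` record shape and the `four_keys` function are hypothetical, and the sketch simplifies time to restore by measuring from the deploy rather than from when the failure was detected. Real tooling would pull this data from your CI/CD and incident systems.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; adapt it to your CI/CD and incident data.
    deployed_at: datetime
    commit_at: datetime                     # earliest commit included in this deploy
    failed: bool = False                    # did this deploy cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def four_keys(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Rough DORA-style metrics for the deployments in one time window."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    failures = [d for d in deploys if d.failed]
    hour = timedelta(hours=1)
    lead_times = [(d.deployed_at - d.commit_at) / hour for d in deploys]
    # Simplification: restore time is measured from the deploy, not from detection.
    restores = [(d.restored_at - d.deployed_at) / hour
                for d in failures if d.restored_at is not None]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "median_lead_time_hours": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "median_restore_hours": median(restores) if restores else 0.0,
    }
```

Reading change failure rate next to deploy frequency is what keeps this honest: if AI-assisted work pushes both numbers up, the team is shipping faster and breaking more.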

Practical execution principles for product teams

Below are 9 execution principles that work especially well when AI is part of your workflow.

Principle 1: Start every initiative with an “Outcome Sentence”

One sentence. No jargon.

Template:

“We will improve [metric] for [user segment] by [behavior change] within [time window].”

If you can’t write this sentence, you are not ready to accelerate.
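One way to make the template enforceable is a small Python sketch that fills the four slots and refuses to render if any slot is blank. The class name `OutcomeSentence` and its field names are illustrative assumptions, not a standard tool.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class OutcomeSentence:
    # One field per slot in the template above.
    metric: str
    user_segment: str
    behavior_change: str
    time_window: str

    def render(self) -> str:
        # A blank slot means the team is not ready to accelerate yet.
        missing = [f.name for f in fields(self) if not getattr(self, f.name).strip()]
        if missing:
            raise ValueError("not ready to accelerate; missing: " + ", ".join(missing))
        return (f"We will improve {self.metric} for {self.user_segment} "
                f"by {self.behavior_change} within {self.time_window}.")
```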

Principle 2: Define “progress evidence” before building

Before sprinting, define what proof looks like.

Examples:

- “3 customer calls confirm the workflow fits reality.”

- “Time-to-complete drops from 7 minutes to 4 minutes.”

- “Error rate reduces by 20% in a controlled rollout.”

AI can generate ideas.

Your team must define evidence.
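If you want evidence criteria to live somewhere more durable than a slide, a minimal sketch is to record a baseline and a target for each criterion before work starts, then check observations against them. The names below (`EvidenceCriterion`, `evaluate`, `relative_reduction`) are hypothetical; the time-to-complete values reuse the 7-to-4-minute example above, and the 5% error-rate baseline is an assumed number for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class EvidenceCriterion:
    # Agreed before building: what we measure, where we start, what counts as proof.
    name: str
    baseline: float
    target: float
    lower_is_better: bool = True

    def met(self, observed: float) -> bool:
        return observed <= self.target if self.lower_is_better else observed >= self.target


def relative_reduction(baseline: float, observed: float) -> float:
    """Fractional drop from baseline, e.g. about 0.2 for a 20% reduction."""
    return (baseline - observed) / baseline


def evaluate(criteria: list[EvidenceCriterion], observed: dict[str, float]) -> dict[str, bool]:
    # A criterion with no observation yet counts as not proven.
    return {c.name: c.name in observed and c.met(observed[c.name]) for c in criteria}
```

For example, a 20% error-rate reduction from a 5% baseline means a target of 4%, and `relative_reduction(0.05, 0.04)` returns roughly 0.2.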

Principle 3: Use AI to expand options, then constrain hard

A good pattern:

- AI generates options.

- Team selects 2–3 viable options.

- Team stress-tests with constraints (security, UX, ops, cost).

- Team commits.

This prevents “option overload” from becoming chaos.
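The expand-then-constrain pattern can be sketched as a filter followed by a ranking. In this illustrative Python version, hard constraints (for example a cost ceiling or a minimum security score) remove options outright, and only the survivors are ranked by team-assigned scores. The `Option` fields, the 0 to 5 scoring scale, and `shortlist` itself are assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Option:
    name: str
    est_cost: float  # illustrative units, e.g. engineer-weeks
    scores: dict[str, float] = field(default_factory=dict)  # team-assigned, 0-5 per dimension


# A hard constraint is pass/fail: failing any one removes the option.
Constraint = Callable[[Option], bool]


def shortlist(options: list[Option], constraints: list[Constraint], keep: int = 3) -> list[Option]:
    viable = [o for o in options if all(c(o) for c in constraints)]
    # Rank survivors by total team score; break ties with lower cost.
    viable.sort(key=lambda o: (-sum(o.scores.values()), o.est_cost))
    return viable[:keep]
```

Treating constraints as filters rather than weights is a deliberate choice here: a high UX score should not be able to buy back a failed security check.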

Principle 4: Keep WIP brutally low

AI increases the temptation to start more.

But outcomes come from finishing.

Limit work in progress. Protect flow.

If you run Scrum, keep sprint scope stable.

If you run Kanban, enforce WIP limits like you mean it.
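At its core, a WIP limit is a rule that blocks starting new work until something finishes. Here is a minimal Python sketch of a single Kanban column that enforces it; the class and exception names are hypothetical, and real boards (Jira, Linear, and similar) configure this in their own settings.

```python
from __future__ import annotations


class WipLimitExceeded(Exception):
    """Raised when a column is full and new work would be started anyway."""


class KanbanColumn:
    """A board column that refuses new work once its WIP limit is reached."""

    def __init__(self, name: str, wip_limit: int):
        if wip_limit < 1:
            raise ValueError("wip_limit must be at least 1")
        self.name = name
        self.wip_limit = wip_limit
        self.items: list[str] = []

    def pull(self, item: str) -> None:
        # Starting new work is blocked until something in this column finishes.
        if len(self.items) >= self.wip_limit:
            raise WipLimitExceeded(
                f"{self.name} is at its limit of {self.wip_limit}; finish something first")
        self.items.append(item)

    def finish(self, item: str) -> None:
        self.items.remove(item)
```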

Principle 5: Treat AI output as a draft, not a decision

AI drafts:

- PRDs

- user stories

- test cases

- implementation approaches

Humans decide:

- scope boundaries

- acceptance criteria

- risk thresholds

- launch readiness

This separation avoids silent accountability gaps.

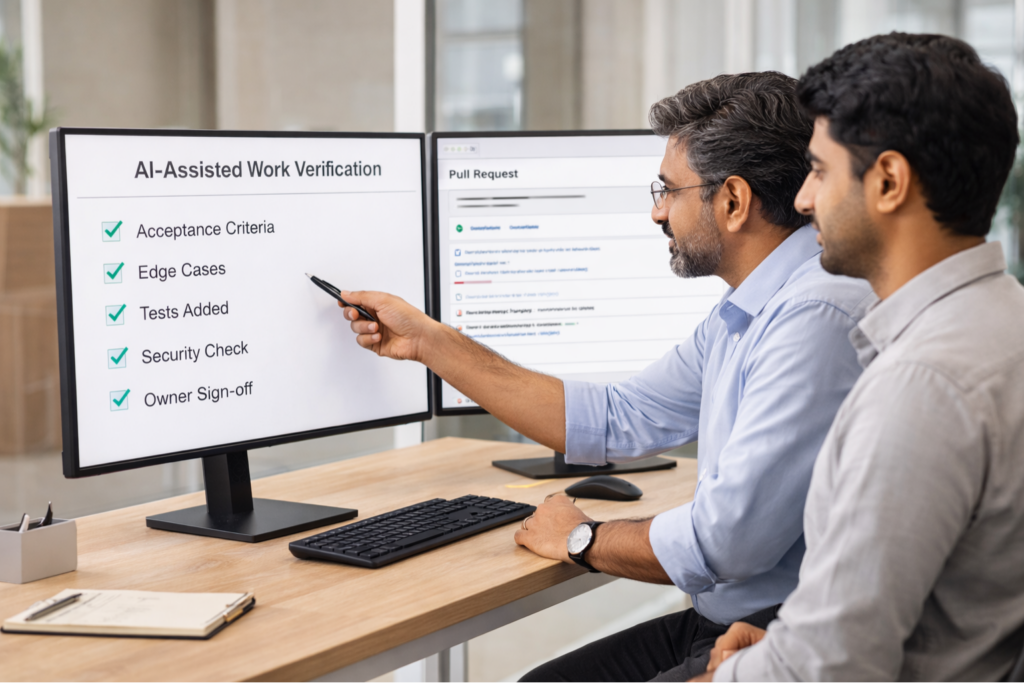

Principle 6: Add a “Verification Step” to every AI-assisted task

If AI helped produce it, define how you verify it.

- Code: tests + review checklist

- Requirements: stakeholder confirmation + edge cases

- UX copy: tone + compliance + clarity

- Data: sampling + reconciliation + anomaly checks

This one habit catches many mistakes before AI can amplify them.
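To make the verification step hard to skip, one option is to encode the list above as required checks per artifact type and block “ready” until every check has passed and a named human owner is set. This is a hypothetical Python sketch; the check names paraphrase the list above and would need to match your own workflow.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Required checks per artifact type, paraphrasing the list above.
REQUIRED_CHECKS: dict[str, set[str]] = {
    "code": {"tests", "review_checklist"},
    "requirements": {"stakeholder_confirmation", "edge_cases"},
    "ux_copy": {"tone", "compliance", "clarity"},
    "data": {"sampling", "reconciliation", "anomaly_checks"},
}


@dataclass
class Artifact:
    name: str
    kind: str
    owner: str  # a named human, never "the AI"
    passed: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        if self.kind not in REQUIRED_CHECKS:
            raise ValueError(f"unknown artifact kind: {self.kind!r}")

    def record(self, check: str) -> None:
        if check not in REQUIRED_CHECKS[self.kind]:
            raise ValueError(f"{check!r} is not a check for {self.kind}")
        self.passed.add(check)

    def outstanding(self) -> set[str]:
        return REQUIRED_CHECKS[self.kind] - self.passed

    def ready(self) -> bool:
        # Ready only with a human owner AND every required check passed.
        return bool(self.owner.strip()) and not self.outstanding()
```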

Principle 7: Build a reusable “Prompt-to-Artifact” standard

Standardize how the team uses AI.

Example structure:

- Context (product + user + constraints)

- Task (what to generate)

- Format (template output)

- Quality checks (what must be true)

- Known risks (what to avoid)

Consistency beats brilliance.

Your team will ship faster and safer.
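The five-part structure above can be captured as a small template object so every prompt the team writes has the same sections. This Python sketch is illustrative only; `PromptSpec` and its Markdown-style section headings are assumptions, not a reference to any particular AI tool’s API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    # One field per section of the team standard.
    context: str
    task: str
    output_format: str
    quality_checks: list[str] = field(default_factory=list)
    known_risks: list[str] = field(default_factory=list)

    def render(self) -> str:
        def bullets(items: list[str]) -> str:
            # An empty list still renders a visible placeholder, so gaps are obvious.
            return "\n".join(f"- {i}" for i in items) or "- (none listed)"

        return (
            f"## Context\n{self.context}\n\n"
            f"## Task\n{self.task}\n\n"
            f"## Format\n{self.output_format}\n\n"
            f"## Quality checks (must be true)\n{bullets(self.quality_checks)}\n\n"
            f"## Known risks (avoid)\n{bullets(self.known_risks)}"
        )
```

Keeping the spec as data rather than free text also makes it easy to review prompt changes the same way the team reviews code.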

Vibe-coding-style workflows become especially risky without standards; this internal article is a good reference point: “Vibe Coding Is Not a Developer Trend — It’s a Product and Growth Shift.”

Principle 8: Measure outcome, not AI activity

Do not measure:

- number of prompts

- number of generated pages

- number of AI-assisted commits

Measure:

- cycle time reduction

- defect rate trend

- customer satisfaction changes

- adoption and retention movement

If you need a practical lens on operational impact of modern AI updates, this internal guide is relevant: “Latest AI Model Updates: 7 Smart Changes for Leaders.”

Principle 9: Create “Calm Cadences”

Fast teams often create loud systems.

Disciplined teams create calm systems:

- Weekly outcome review (not status theatre)

- Risk review for launches

- Decision log for reversals

- Retro focused on system fixes

AI increases pace.

Calm cadence protects thinking.

Common execution mistakes amplified by AI

AI rarely creates brand-new failure modes.

It amplifies existing ones.

Many delivery failures happen because AI accelerates execution without improving decision quality.

Mistake 1: Vague tickets become fast garbage

If user stories are fuzzy, AI will produce “complete-looking” stories that still miss the point.

Fix: tighten the problem statement and acceptance criteria first.

Mistake 2: Teams ship “AI output” without ownership

If nobody owns the artifact, quality collapses.

Fix: every artifact needs a human owner and a verification step.

Mistake 3: Overbuilding becomes easier

AI makes it easy to implement “nice-to-have” features quickly.

Fix: enforce scope discipline through outcome evidence.

Mistake 4: Copy-paste architecture decisions

AI can propose solid architectures.

It can also ignore your constraints.

Fix: require an architecture fit check (latency, privacy, maintainability, ops).

Mistake 5: Faster code, slower stability

If AI accelerates code writing without matching test rigor, your change failure rate rises.

Fix: protect engineering fundamentals. DORA metrics exist for a reason.

Mistake 6: Productivity illusions

Multiple studies show AI can increase task speed in controlled settings, but real value depends on how work is structured and verified.

Disciplined teams treat AI as a force multiplier for good systems—not a replacement for systems.

How Disciplined Teams Respond When AI Accelerates Execution

Here’s what you’ll notice when a team is truly disciplined in an AI-driven environment.

They are outcome-literate

Every initiative ties to:

- a metric

- a user behavior

- a time window

- a validation method

They keep accountability human

AI helps produce.

Humans remain accountable.

No ambiguity.

They standardize AI usage patterns

They don’t rely on hero prompts.

They build repeatable playbooks:

- discovery synthesis prompts

- PRD draft prompts

- test generation prompts

- release note prompts

Then they embed verification.

They manage flow, not frenzy

They finish work.

They control WIP.

They protect focus.

They treat AI like a junior teammate

Helpful. Fast.

But needs review.

This framing aligns with how modern teams talk about practical AI adoption: measure impact, guard quality, and reduce rework rather than chasing hype.

Closing reflection for the week

AI will keep accelerating execution.

That part is inevitable.

But outcomes will still belong to the teams who can:

- choose the right problem

- define evidence of progress

- limit WIP

- verify quality

- stay calm under speed

This week, try one small discipline upgrade:

Add a verification step to every AI-assisted artifact.

Just one week.

You will feel the difference.

Remember: AI accelerates execution, but discipline determines outcomes.

Reflection question:

Where is your team moving fast today—without clear evidence that outcomes are improving?