Vatsal Shah

Certified ScrumMaster® | Agile Technical Project Manager

AI Tool Speed vs Product Outcomes: Why Fast AI Teams Still Fail—and 9 Moves Leaders Use to Win

AI tool speed vs product outcomes is the defining leadership challenge of the AI era.

AI tools have dramatically increased the speed of execution — faster drafts, faster prototypes, faster releases. But speed alone does not create customer value. Without clear decisions and outcome ownership, AI-driven activity often results in motion without impact.

Introduction: Speed is cheap. Outcomes are rare.

AI has made it absurdly easy to produce more: more drafts, more PRDs, more prototypes, more code, more campaigns, more “progress screenshots.”

But here’s the brutal truth leaders must internalize:

AI increases speed, not clarity.

And speed without clarity creates high-velocity waste.

Many teams will look “busy” in AI-first workflows—while customer value stays flat. That’s why the leadership job is no longer “Which AI tool should we buy?”

It’s: How do we ensure AI-accelerated work translates into measurable product outcomes?

In this post, you’ll get a practical operating model that connects AI tool speed to retention, activation, revenue, reliability, and trust, without turning your org into AI chaos.

Read this companion article first if you want a deeper “execution discipline” foundation: Vibe Coding Is Not a Developer Trend — It’s a Product and Growth Shift

AI Tool Speed vs Product Outcomes: The Gap Leaders Must Own

AI tools compress the cost of producing outputs. The danger is assuming outputs equal outcomes.

Outputs (activity) examples

- 30 user stories generated in 10 minutes

- 5 landing pages spun up in a day

- 2 prototypes built before lunch

- A “working demo” created without hard decisions

Outcomes (value) examples

- Higher activation rate

- Faster time-to-first-value

- Improved retention in week 4

- Reduced support tickets

- Lower change failure rate & faster recovery (reliability)

If your team celebrates “we shipped faster” but can’t say which outcome metric moved, you’re scaling theater.

A simple leadership lens:

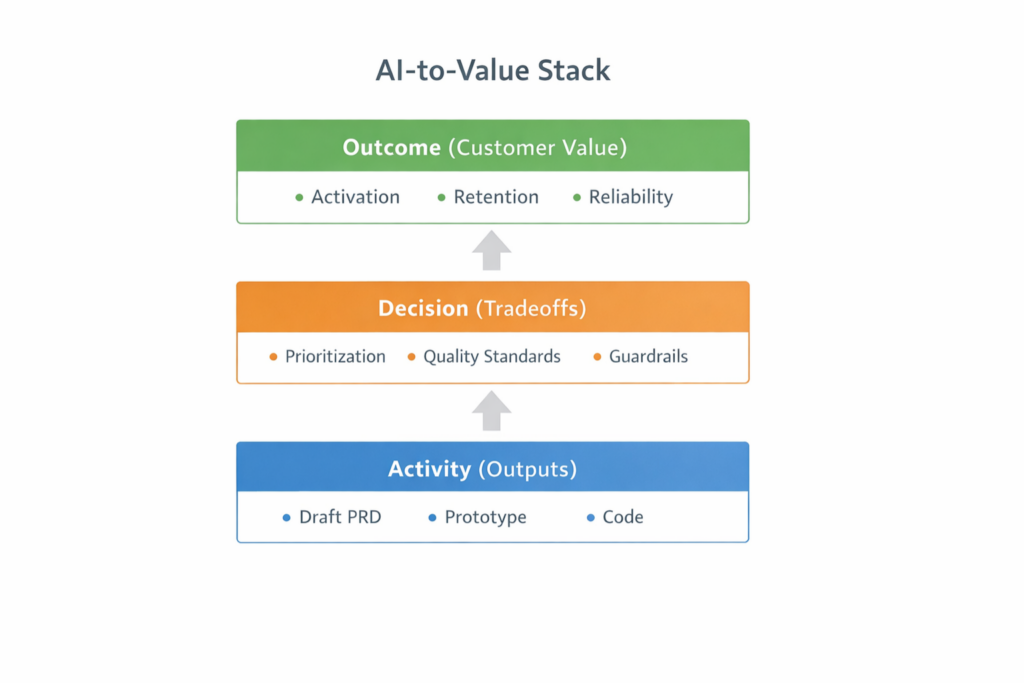

The 3-Layer Value Stack

- Activity Layer: AI produces volume (drafts, code, ideas).

- Decision Layer: Humans choose what matters (tradeoffs, priorities, sequencing).

- Outcome Layer: Customers reward you (usage, trust, revenue).

AI over-optimizes Layer 1. Leaders must protect Layers 2 and 3.

Leadership Responsibility in AI Tool Speed vs Product Outcomes

AI adoption is not an “enablement initiative.” It’s an operating model change.

The best leaders treat AI like a new form of leverage—then add guardrails so leverage doesn’t become instability.

Leadership in the AI era is not about adopting tools faster, but about closing the gap between AI tool speed and product outcomes through disciplined decision-making.

Here are 9 leadership moves that consistently turn AI speed into value:

1) Start with “Outcome First” rules (not tool permissions)

Before enabling new AI tools org-wide, define the Outcome First Rules:

- Every AI-assisted initiative must map to one measurable outcome metric

- Every AI workflow must have a defined quality bar

- Every AI-generated asset must have an owner (accountability never becomes “the model did it”)

If a team can’t name the outcome metric, you don’t have a project—you have entertainment.

2) Upgrade your metrics: pair “speed” with “stability”

Many teams accelerate throughput and quietly destroy reliability.

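A clean way to balance this is to use DORA-style delivery metrics. DORA’s “four keys” pair two throughput measures (lead time for changes, deployment frequency) with two stability measures (change failure rate, time to restore service), so a gain in speed cannot hide a loss in reliability.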

Use these as leadership guardrails:

- Lead time for changes

- Deployment frequency

- Change failure rate

- Time to restore service

Your AI rollout is working only if speed improves without stability collapsing.
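As a rough illustration, the four guardrail metrics above can be computed from a simple log of deployments. This is a minimal sketch, not an official DORA implementation: the `Deployment` record, its field names, and the `four_keys` helper are hypothetical, and the use of medians and of hours as the unit are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def four_keys(deployments: list[Deployment], window_days: int) -> dict[str, float]:
    """Summarize speed and stability for one reporting window."""
    if not deployments:
        raise ValueError("need at least one deployment in the window")
    lead_times = [hours(d.deployed_at - d.committed_at) for d in deployments]
    failures = [d for d in deployments if d.failed]
    restore_times = [
        hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        # Speed
        "median_lead_time_hours": median(lead_times),
        "deployments_per_day": len(deployments) / window_days,
        # Stability
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore_hours": median(restore_times) if restore_times else 0.0,
    }
```

Comparing the output for a window before the AI rollout with a window after it shows whether lead time and deployment frequency improved while change failure rate and time to restore held steady.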

3) Create an “AI Work Intake” like product discovery

AI makes it easy for everyone to propose 50 ideas a week. That’s not innovation. That’s noise.

Create a lightweight intake with 5 filters:

- Customer pain clarity (what breaks today?)

- Value hypothesis (what changes if we fix it?)

- Signal plan (what will we measure in 7–14 days?)

- Risk check (privacy, compliance, reputation)

- Reversibility (can we roll back safely?)

This forces AI enthusiasm into product discipline.
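One lightweight way to enforce the five filters is to treat each idea as a record that cannot enter the backlog until every field is filled in. The sketch below assumes a hypothetical `IntakeRequest` form and `missing_filters` helper; the field names simply mirror the five filters above and are not taken from any particular tool.

```python
from dataclasses import dataclass, fields


@dataclass
class IntakeRequest:
    """One proposed AI-assisted idea (hypothetical intake form)."""
    customer_pain: str      # what breaks today?
    value_hypothesis: str   # what changes if we fix it?
    signal_plan: str        # what will we measure in 7-14 days?
    risk_check: str         # privacy, compliance, reputation
    reversibility: str      # can we roll back safely?


def missing_filters(request: IntakeRequest) -> list[str]:
    """Return the names of any filters left blank, in form order."""
    return [
        f.name
        for f in fields(request)
        if not getattr(request, f.name).strip()
    ]


idea = IntakeRequest(
    customer_pain="New users abandon onboarding at the import step",
    value_hypothesis="AI-assisted import raises activation",
    signal_plan="",
    risk_check="No customer data leaves approved tools",
    reversibility="  ",
)
print(missing_filters(idea))
```

An idea with an empty list of missing filters moves forward; anything else goes back to the proposer with the gaps named.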

4) Replace “roadmaps” with an “experiment portfolio”

AI-era execution rewards learning velocity, not launch vanity.

Run a portfolio with three buckets:

- Core Outcomes: retention, activation, conversion, reliability

- AI Leverage: internal efficiency (support triage, QA assist, release notes)

- Frontier Bets: risky differentiators (agentic workflows, personalization)

The rule: Frontier Bets never steal oxygen from Core Outcomes.

5) Make “quality” explicit: define what “good” looks like

AI output often looks confident—even when it’s wrong, generic, or off-strategy.

Leaders should require a Quality Definition for each AI-heavy workflow:

- Accuracy expectations (what is “acceptable wrong”?)

- Brand voice constraints (what must remain human?)

- Security boundaries (what data cannot enter prompts?)

- Review depth (when does it require expert review?)

This prevents “fast garbage” scaling across the org.

6) Treat governance as a growth enabler (not bureaucracy)

If you don’t govern AI, you’ll govern incidents.

Describe risk in plain, trusted framework language (simple, not legal-heavy). The NIST AI Risk Management Framework (AI RMF) is a strong reference point for building practical risk thinking.

Minimum governance that keeps speed:

- Approved tool list by data sensitivity tier

- Prompt/data handling rules

- Audit trail for AI-assisted decisions in high-impact areas

- Human override policy (who can stop the line?)

Governance is not “control.” It’s permission to move fast safely.

7) Design “human + AI” roles, not “AI replaces humans”

The biggest adoption mistake is treating AI as a drop-in replacement for a person.

Instead, redesign work as a pipeline:

- AI drafts → humans decide

- AI summarizes → humans interpret

- AI generates options → humans choose tradeoffs

- AI accelerates execution → humans validate outcomes

This framing reduces fear, increases adoption, and protects decision quality.

8) Make product growth the scoreboard (not AI usage)

Some teams brag: “We’re using AI in everything.”

That’s not a business outcome.

Sustainable growth emerges only when AI tool speed is intentionally tied to product outcomes such as activation, retention, and reliability.

Your scoreboard should read like:

- Activation ↑

- Time-to-first-value ↓

- Retention ↑

- Expansion revenue ↑

- Support load ↓

- Reliability ↑

McKinsey’s research repeatedly stresses that realizing value requires practices across strategy, operating model, talent, and adoption, not just tools.

9) Run a 30-day “Value Conversion Sprint”

If you want immediate traction, run a focused sprint:

Week 1: Choose 2 workflows

- One customer-facing (e.g., onboarding friction)

- One internal (e.g., support triage)

Week 2: Build with guardrails

- Define success metric, review policy, rollback plan

Week 3: Ship + measure

- Instrument, compare baseline vs AI-assisted delta

Week 4: Decide

- Scale, modify, or kill

- Publish learnings internally (build organizational clarity)

This is how you avoid the “pilot graveyard.”
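The Week 3 comparison and the Week 4 decision can be reduced to a small calculation. The sketch below is illustrative only: `relative_delta` and `decide` are hypothetical helpers, and the rule that maps results to scale, modify, or kill (meet the expected delta, improve but fall short, or fail to improve) is an assumption, not a prescribed threshold.

```python
def relative_delta(baseline: float, observed: float) -> float:
    """Relative change of the AI-assisted result versus the baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) / baseline


def decide(
    baseline: float,
    observed: float,
    expected_delta: float,
    higher_is_better: bool = True,
) -> str:
    """Map a measured delta to scale / modify / kill (assumed rule)."""
    improvement = relative_delta(baseline, observed)
    if not higher_is_better:
        # For metrics like support tickets, a drop counts as improvement.
        improvement = -improvement
    if improvement >= expected_delta:
        return "scale"
    if improvement > 0:
        return "modify"
    return "kill"


# Activation rate: baseline 40%, AI-assisted 46%, expected +10% relative lift.
print(decide(baseline=0.40, observed=0.46, expected_delta=0.10))

# Weekly support tickets: baseline 200, AI-assisted 170, expected 10% reduction.
print(decide(baseline=200, observed=170, expected_delta=0.10, higher_is_better=False))
```

Writing the expected delta down in Week 2, before any data arrives, is what keeps the Week 4 decision honest.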

Real-life example: the “Prototype Trap” that kills value

A founder enables AI coding + design tools. Suddenly:

- demos multiply

- feature requests explode

- team looks insanely productive

But after 6 weeks:

- activation doesn’t improve

- retention is flat

- support issues rise

- roadmap becomes reactive

Why it happened:

AI increased output velocity, but leadership never clarified:

- Which customer problem mattered most

- What success metric defined “value”

- What quality and reliability constraints were non-negotiable

Fix: leadership returns to outcome-first, sets a single activation metric target, and forces every AI-driven build to prove impact within 14 days—or get killed.

Practical Guidance for AI Tool Speed vs Product Outcomes

Use this as an internal operating memo.

AI-to-Value Checklist

- ✅ Define 1 outcome metric per initiative

- ✅ Define baseline + expected delta

- ✅ Define quality bar + review policy

- ✅ Define risk tier + data rules

- ✅ Instrument measurement before shipping

- ✅ Run a 7–14 day signal window

- ✅ Scale only after outcome proof

- ✅ Keep speed + stability balanced

Recommended reading from this blog

- Vibe Coding Is Not a Developer Trend — It’s a Product and Growth Shift

https://vatsalshah.co.in/vibe-coding-product-growth/

- AI Signals for Leaders: Improve Decision Quality Without Panic

https://vatsalshah.co.in/ai-signals-for-leaders-decision-quality/

- Why Product Leaders Must Rethink Growth in the Age of AI

https://vatsalshah.co.in/product-growth-strategy-in-the-age-of-ai/

- AI Signal vs Noise Framework: How Leaders Make Better Decisions

https://vatsalshah.co.in/ai-signal-vs-noise-framework/

Conclusion: Mastering AI Tool Speed vs Product Outcomes

The real winners in the AI era will not be the fastest teams, but the leaders who consistently turn AI tool speed into product outcomes.

AI makes it easy to move fast.

Leadership makes it meaningful to move right.

If you do the 9 moves above, you’ll build a culture where:

- AI accelerates execution

- humans protect judgment

- outcomes stay measurable

- speed doesn’t destroy trust

Your next leadership question:

Where is your team producing more AI-powered activity—but still failing to move a single core product outcome?